Different pipelines for the masses

If you work in the IT industry long enough, you start to see the same terms and messaging repeat. In this case, we are going to look at the term “pipeline”. But what exactly is a “pipeline”? What is its purpose? How many variations are there? In this post, we will look at the different types of pipelines found within the IT industry.

What is a Pipeline?

Data Pipeline

In a general sense, a pipeline is a series of operations chained together to accomplish a goal. The output of one operation becomes the input of the next, until the desired goal is reached. This process can be visualized as a series of interconnected pipes where data flows from one to the next; along the way, the data is either simply moved or manipulated.

For example, suppose you want to move data from database A (source) to Kafka (target). The pipeline would look like:

- Capture all changed data/transactions

- Ship data/transactions

- Apply data/transactions to Kafka topic

This is an oversimplified example of a pipeline that moves data from a relational database to a publication platform, yet it is still considered a data pipeline. These types of “pipelines” can be chained together to build a data fabric or data mesh. Tools like Oracle GoldenGate or Oracle Cloud Infrastructure GoldenGate (OCI GoldenGate) can be used to build these pipelines between heterogeneous platforms, on-premises and across clouds.
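The capture, ship, and apply steps can be sketched as chained Python generators. This is only an illustration of the chaining idea: the change records, the `db_a.` topic naming, and the dictionary standing in for Kafka are all assumptions made for the example, not how GoldenGate or Kafka work internally.

```python
from typing import Dict, Iterable, Iterator, List

# Hypothetical change records; a real source would be a database transaction log.
SOURCE_LOG = [
    {"op": "INSERT", "table": "orders", "row": {"id": 1, "total": 25.0}},
    {"op": "UPDATE", "table": "orders", "row": {"id": 1, "total": 30.0}},
    {"op": "DELETE", "table": "orders", "row": {"id": 1}},
]


def capture(log: Iterable[dict]) -> Iterator[dict]:
    """Capture: read each changed record from the source."""
    for change in log:
        yield change


def ship(changes: Iterable[dict]) -> Iterator[dict]:
    """Ship: wrap each change in an envelope naming its destination topic."""
    for change in changes:
        yield {"topic": f"db_a.{change['table']}", "payload": change}


def apply_changes(messages: Iterable[dict]) -> Dict[str, List[dict]]:
    """Apply: append each payload to its topic (a dict of lists stands in for Kafka)."""
    topics: Dict[str, List[dict]] = {}
    for message in messages:
        topics.setdefault(message["topic"], []).append(message["payload"])
    return topics


# The output of each step is the input of the next.
topics = apply_changes(ship(capture(SOURCE_LOG)))
print(len(topics["db_a.orders"]))  # prints 3
```

Because each step only consumes the previous step's output, any one of them could be swapped out without touching the others.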

Two types of Data Pipelines

Batch Processing

Batch processing is the most common type of data pipeline. It was a critical type of pipeline in the early years of the IT industry, enabling organizations to move large amounts of data from one system to another. This type of pipeline is not essential for analytics, yet it is typically associated with ETL/ELT processes. In many cases, when batch processing is used, the timing of the execution is not critical and the job often runs at night.
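A batch job can be sketched as a loop that extracts rows in fixed-size chunks, transforms each chunk, and loads it into a target. The function names, the batch size, and the uppercase transformation below are hypothetical and chosen only to keep the example small.

```python
from itertools import islice
from typing import Iterable, Iterator, List


def batches(rows: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Yield successive lists of at most `size` rows."""
    iterator = iter(rows)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk


def run_nightly_load(source: Iterable[dict], target: List[dict], size: int = 2) -> int:
    """Extract in batches, transform each row, and load into the target list."""
    loaded_batches = 0
    for chunk in batches(source, size):
        # Transform: a stand-in for whatever the ETL step needs to do.
        transformed = [{**row, "name": row["name"].upper()} for row in chunk]
        target.extend(transformed)
        loaded_batches += 1
    return loaded_batches


source_rows = [{"id": i, "name": f"customer{i}"} for i in range(5)]
warehouse: List[dict] = []
batch_count = run_nightly_load(source_rows, warehouse)
print(batch_count, len(warehouse))  # prints: 3 5
```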

Stream Processing

Stream processing is becoming the standard in the IT industry today. With stream processing, data is continuously updated based on changes that occur between systems, also known as events. Data processed through this type of pipeline is stored in a topic that outside systems can subscribe to, enabling quicker ingestion of data for organizational use.
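The topic-and-subscriber idea can be sketched with a small in-memory class. The `Topic` class, the `payments` topic, and the balance handler are assumptions made for illustration; a real streaming platform adds persistence, partitioning, and delivery guarantees that this sketch ignores.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class Topic:
    """A tiny in-memory topic: stores events and pushes them to subscribers."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.events: List[dict] = []
        self.subscribers: List[Callable[[dict], None]] = []

    def subscribe(self, handler: Callable[[dict], None]) -> None:
        self.subscribers.append(handler)

    def publish(self, event: dict) -> None:
        # Store the event, then hand it to every subscriber as it arrives.
        self.events.append(event)
        for handler in self.subscribers:
            handler(event)


balances: DefaultDict[str, float] = defaultdict(float)


def update_balance(event: dict) -> None:
    """Subscriber: keep a running balance per account, one event at a time."""
    balances[event["account"]] += event["amount"]


payments = Topic("payments")
payments.subscribe(update_balance)

for event in [
    {"account": "A", "amount": 10.0},
    {"account": "B", "amount": 5.0},
    {"account": "A", "amount": -3.0},
]:
    payments.publish(event)

print(dict(balances))  # prints: {'A': 7.0, 'B': 5.0}
```

Unlike the batch approach, the subscriber's state is current after every event rather than after a scheduled run.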

Elements of a Data Pipeline

CI/CD Pipeline

A Continuous Integration/Continuous Delivery (CI/CD) pipeline automates the software delivery process. This type of pipeline does the following:

- Builds code

- Runs tests (unit tests, QA tests, etc.) (CI)

- Deploys new versions of the application (CD)

By building CI/CD pipelines, the building and delivery of applications is automated, which removes manual errors, provides standard feedback to developers, and enables faster product iterations.

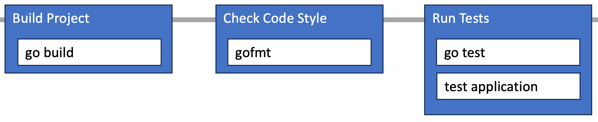

Elements of a CI/CD Pipeline

A CI/CD pipeline may seem to be more of an overhead process, but it is not. It should be viewed as a runnable specification of the steps needed to deliver a software package from one release version to the next. Without a CI/CD pipeline, developers and systems administrators would still need to perform the same steps manually, making them less productive.

Most CI/CD pipelines typically have the following stages:

Failure in any of the stages typically triggers a notification to let the responsible parties know about the cause. Otherwise, notifications are sent only after each successful deployment of the application.
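The "runnable specification" idea, together with the notification behavior, can be sketched as an ordered list of stages that stops at the first failure. The stage names come from the build, test, and deploy steps listed earlier; the `run_pipeline` function and the lambdas standing in for real commands are hypothetical.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage], notify: Callable[[str], None]) -> bool:
    """Run stages in order; stop and notify on the first failure."""
    for name, step in stages:
        if not step():
            notify(f"FAILED at stage: {name}")
            return False
    # Only reached when every stage passed.
    notify("Deployment succeeded")
    return True


notifications: List[str] = []

# Hypothetical stages; each lambda stands in for a real build, test, or deploy command.
healthy = [("build", lambda: True), ("test", lambda: True), ("deploy", lambda: True)]
broken = [("build", lambda: True), ("test", lambda: False), ("deploy", lambda: True)]

healthy_ok = run_pipeline(healthy, notifications.append)
broken_ok = run_pipeline(broken, notifications.append)
print(notifications)  # prints: ['Deployment succeeded', 'FAILED at stage: test']
```

In the failing run the deploy stage never executes, which is the point of gating deployment behind the earlier stages.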

Machine Learning Pipelines

If we take a look at Python with the scikit-learn package, pipelining is used in machine learning processing to resolve issues like data leakage in testing setups. Pipelines, in this case, work by allowing a linear series of data transformations to be linked together, resulting in a measurable modeling process. The objective is to guarantee that every phase within a pipeline is limited to the data available within the pipeline.

The scikit-learn package provides built-in tools for building pipelines (the sklearn.pipeline.Pipeline class and the sklearn.pipeline.make_pipeline function), which simplify pipeline construction.

A typical machine learning pipeline using Python with the scikit-learn package may look like the following:

- Loading Data

- Data Preprocessing

- Splitting of data

- Transformations

- Predictions and Evaluations
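The steps above can be sketched with scikit-learn as follows. This is a minimal illustration rather than a prescribed setup: the bundled iris dataset, `StandardScaler`, `LogisticRegression`, and the 75/25 split are all assumptions chosen for the example.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Loading data: the iris dataset is an arbitrary choice for illustration.
X, y = load_iris(return_X_y=True)

# Splitting: hold out a test set before any transformation is fitted.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Transformations and model chained into a single estimator.
model = make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000))

# fit() learns the scaler's statistics from the training rows only,
# so nothing from the test set leaks into preprocessing.
model.fit(X_train, y_train)

# Predictions and evaluation on the held-out data.
predictions = model.predict(X_test)
accuracy = accuracy_score(y_test, predictions)
print(f"accuracy: {accuracy:.2f}")
```

Fitting the scaler inside the pipeline, rather than on the full dataset beforehand, is what keeps each phase limited to the data available within the pipeline.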

The image below illustrates what the pipeline looks like in concept:

AI Pipelines

AI pipelines, like the machine learning pipelines above, are interconnected, streamlined collections of operations. As data works through machine learning systems, it is stored in collections and used to train models. Essentially, AI pipelines are “workflows”, or interactive paths through which data moves through a machine learning platform.

An AI pipeline/workflow is generally made up of the following:

- Data Ingestion

- Data Cleaning

- Preprocessing

- Modeling

- Deployment

The AI pipeline (workflow) moves information from collection to collection until it reaches final deployment. It is an iterative process that continuously feeds new information to machine learning/AI systems to learn from and process.
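As a rough sketch, the five stages can be written as plain Python functions whose outputs feed one another. Everything here is a stand-in: the raw readings are made up, and the "model" is just a mean used as a threshold, chosen so the example runs without any machine learning library.

```python
from statistics import mean
from typing import Callable, List, Optional


def ingest() -> List[Optional[str]]:
    """Data ingestion: hypothetical raw readings, some missing or malformed."""
    return ["4.0", "6.0", None, "bad", "10.0"]


def clean(raw: List[Optional[str]]) -> List[float]:
    """Data cleaning: keep only values that parse as numbers."""
    values: List[float] = []
    for item in raw:
        try:
            values.append(float(item))
        except (TypeError, ValueError):
            continue
    return values


def preprocess(values: List[float]) -> List[float]:
    """Preprocessing: rescale values to the 0..1 range."""
    low, high = min(values), max(values)
    return [(v - low) / (high - low) for v in values]


def train(features: List[float]) -> float:
    """Modeling: the 'model' is simply the mean, used as a decision threshold."""
    return mean(features)


def deploy(threshold: float) -> Callable[[float], bool]:
    """Deployment: expose the trained model as a callable."""
    return lambda x: x > threshold


# Each stage hands its collection to the next until a deployed model exists.
cleaned = clean(ingest())
predict = deploy(train(preprocess(cleaned)))

# Inputs to predict() are assumed to be already scaled to 0..1.
print(cleaned, predict(0.9), predict(0.1))  # prints: [4.0, 6.0, 10.0] True False
```

Rerunning the same chain whenever `ingest()` returns new data is one simple way to picture the iterative nature of the workflow.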

How do ML Pipelines relate to AI Pipelines?

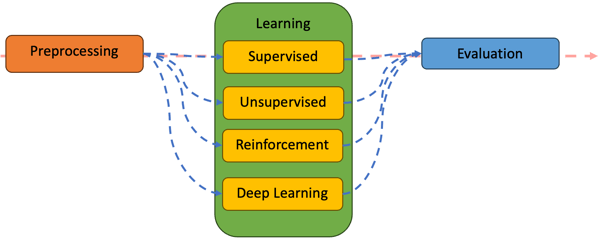

Now that we understand what AI pipelines are, it is beneficial to understand what is happening inside them. There are several stages that an AI has to work through as part of the “learning” process. These stages are:

- Preprocessing

- Learning

- Evaluation

- Prediction

Each one of these stages is used by an AI as follows:

Preprocessing – the steps and methods that machine learning performs during initial modeling. These sub-steps include cleaning data, structuring data, and preparing it for AI learning.

Learning – comprises the different models that are generated by machine learning algorithms. These different models are:

- Supervised Learning – providing machine learning algorithms with examples of expected output

- Unsupervised Learning – machine learning algorithms use data sets to learn about the inherent patterns in the data and how to best use the data for a specific task

- Reinforcement Learning – action-and-reward teaching

- Deep Learning – teaching that uses layers of neural networks to facilitate machine learning for complex tasks like pattern recognition for physical systems and facial and image recognition
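The difference between the first two learning styles can be shown with a small, library-free sketch. It covers only supervised and unsupervised learning, and the one-dimensional data, the nearest-centroid classifier, and the two-cluster k-means are assumptions picked to keep the example short.

```python
from statistics import mean
from typing import Dict, List, Tuple


def fit_supervised(examples: List[Tuple[float, str]]) -> Dict[str, float]:
    """Supervised: labels are given, so learn one centroid per label."""
    by_label: Dict[str, List[float]] = {}
    for value, label in examples:
        by_label.setdefault(label, []).append(value)
    return {label: mean(values) for label, values in by_label.items()}


def predict(centroids: Dict[str, float], value: float) -> str:
    """Assign the label whose centroid is closest to the value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


def fit_unsupervised(values: List[float], rounds: int = 10) -> List[float]:
    """Unsupervised: no labels, so find two cluster centers with 1-D k-means."""
    centers = [min(values), max(values)]
    for _ in range(rounds):
        groups: List[List[float]] = [[], []]
        for v in values:
            nearest = 0 if abs(v - centers[0]) <= abs(v - centers[1]) else 1
            groups[nearest].append(v)
        # Keep the old center if a group ends up empty.
        centers = [mean(g) if g else c for g, c in zip(groups, centers)]
    return centers


labeled = [(1.0, "small"), (2.0, "small"), (9.0, "large"), (10.0, "large")]
centroids = fit_supervised(labeled)
label = predict(centroids, 3.0)
print(label)  # prints: small

unlabeled = [1.0, 2.0, 9.0, 10.0]
centers = fit_unsupervised(unlabeled)
print(centers)  # prints: [1.5, 9.5]
```

Both functions end up with the same two centers, but only the supervised version knows what to call them, because only it was given examples of the expected output.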

Evaluation – driven by a “trained” brain that was created by machine learning algorithms and is evaluated against data provided by the end user. At this stage, the AI is expecting to receive information from the user that matches what the AI has been trained with.

The image below illustrates what an AI pipeline looks like in concept:

Summary

In this article, we learned that there are a few different types of pipelines and that the industry term “pipeline” can be used for many different topics and discussions. Although the term “pipeline” has become synonymous with many different processes, the core concept is still conveying data through a series of steps while acting upon that data in some way. As the industry and organizations transition further into the AI/LLM space, having a firm understanding of which types of pipelines are used will help organizations adopt new technologies and processes.